Running CoreDNS as a Forwarder in Kubernetes

This post details how I got CoreDNS running as a forwarder in a Kubernetes cluster. There were several problems that stood in the way of this goal:

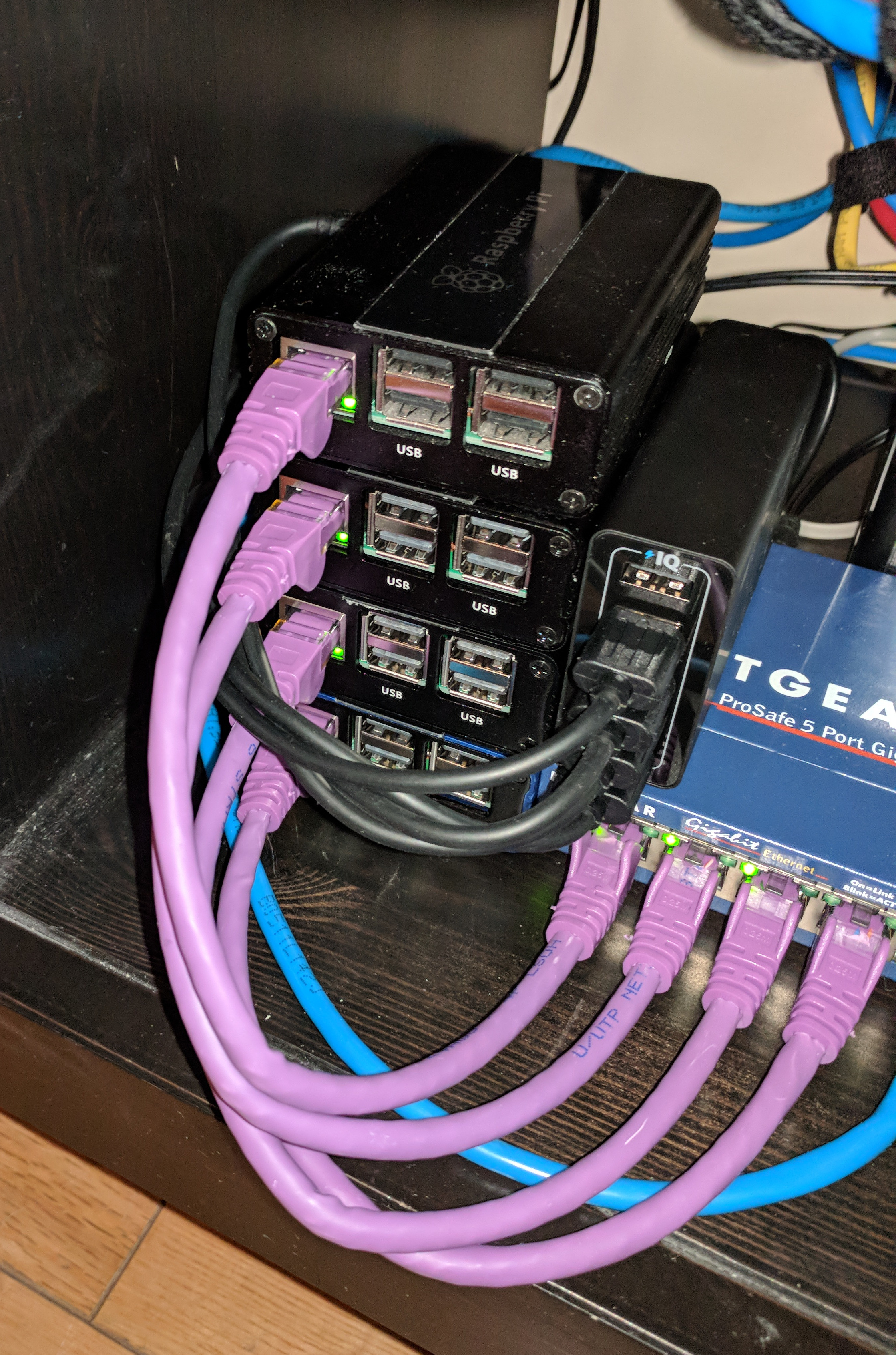

- Having (and building) a cluster out of Raspberry Pis.

- Making load balancing possible in a non-cloud environment.

- Extending CoreDNS with a plugin that could communicate with 9.9.9.9 using DNS-over-TLS.

- Building arm Docker containers on amd64.

- Having a (simple) CI system to build (Docker) images and version the k8s manifests.

- No published repository, see various gists referenced in this document.

Cluster

I bought 4 Pis, some nice Corkea cases, an Anker USB power supply, and power and network cables. Then it is just a matter of putting everything together and building the cluster.

For installing Kubernetes I used this excellent guide from hypriot.

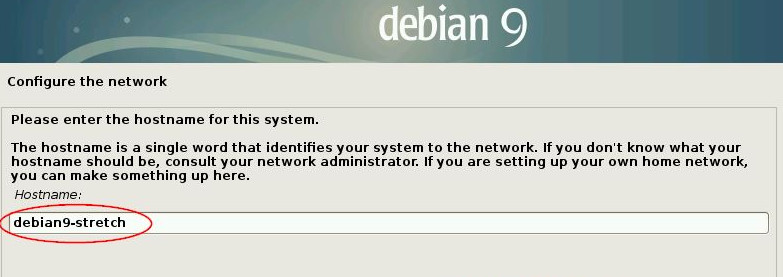

Note that some of the issues mentioned there have since been fixed, e.g. I had no trouble installing flannel. Automatically setting the host names on the flashed SD cards didn’t work for me, though.

After fixing these (minor) issues:

    % kubectl get nodes
    NAME      STATUS    ROLES     AGE       VERSION
    master    Ready     master    1m        v1.9.1
    node0     Ready     <none>    1m        v1.9.1
    node1     Ready     <none>    1m        v1.9.1
    node2     Ready     <none>    1m        v1.9.1

One nit: you can’t name a cluster. With a Linux install there is always that joyful moment where you get to pick a hostname.

No such joy for Kubernetes. I refer to the cluster as carbon in spite of this.

Load Balancing

A colleague (@dave_universetf) started MetalLB. A load balancer for bare metal, great!

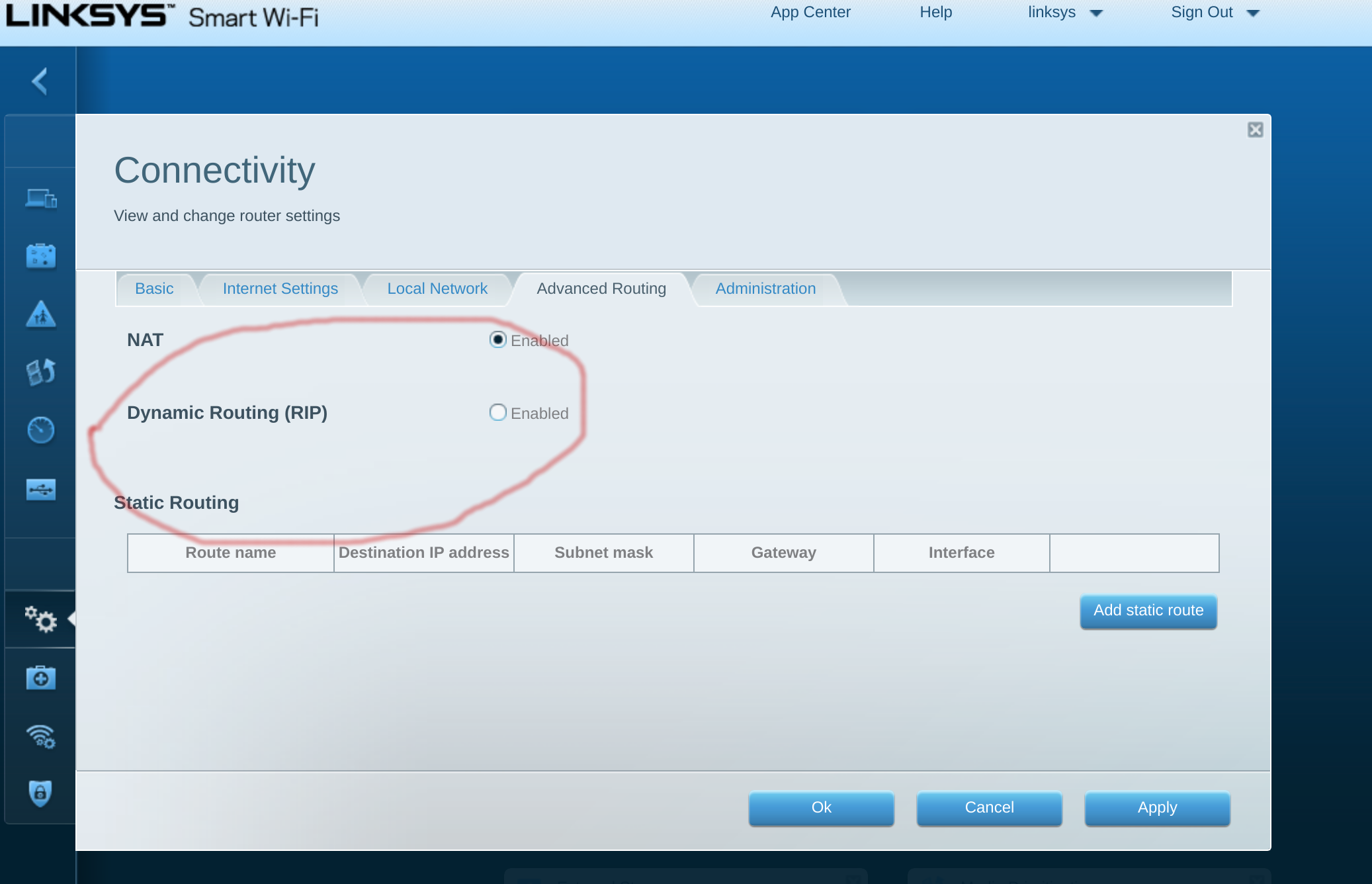

A load balancer that does BGP… but my EA6900 can’t talk that protocol. No worries, I see RIP (RFC 2453) in the configuration, so let’s use that.

After implementing the RIP protocol I, sadly, came to the conclusion that my EA does RIP xor NAT for all systems behind it. And, as far as I know, Android can’t do RIP. Back to square one.

Then Dave came up with a wonderful idea: use ARP. And even better, a nice Go library existed.

So I implemented an arp-speaker for MetalLB that would “announce” the service VIP and then let the kube-proxy handle the delivery to the actual pod(s). This took the better part of a week. But in the end we had a working implementation where kubectl expose would work with my crappy EA6900.

I think this is quite significant.

Anyone can use Kubernetes load balancing with cheap hardware, at home.
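
To give an idea of what “announcing” a VIP looks like on the wire, here is a minimal sketch that builds the 28-byte ARP reply payload from RFC 826, mapping a VIP to a node's MAC address. This is not MetalLB's code: the function name `arpReplyFor` and the MAC and client addresses are made up for illustration, and actually sending the packet (wrapped in an Ethernet frame, via a raw socket) is left out.

```go
package main

import (
	"encoding/binary"
	"fmt"
	"net"
	"net/netip"
)

// arpReplyFor builds an ARP reply payload telling the host at
// targetIP/targetMAC that vip can be reached at nodeMAC.
// Hypothetical helper for illustration; IPv4 over Ethernet only.
func arpReplyFor(nodeMAC, vip, targetMAC, targetIP string) ([]byte, error) {
	sha, err := net.ParseMAC(nodeMAC)
	if err != nil {
		return nil, err
	}
	tha, err := net.ParseMAC(targetMAC)
	if err != nil {
		return nil, err
	}
	if len(sha) != 6 || len(tha) != 6 {
		return nil, fmt.Errorf("only 6-byte Ethernet addresses are supported")
	}
	spa, err := netip.ParseAddr(vip)
	if err != nil {
		return nil, err
	}
	tpa, err := netip.ParseAddr(targetIP)
	if err != nil {
		return nil, err
	}
	if !spa.Is4() || !tpa.Is4() {
		return nil, fmt.Errorf("only IPv4 addresses are supported")
	}
	spa4, tpa4 := spa.As4(), tpa.As4()

	b := make([]byte, 28)
	binary.BigEndian.PutUint16(b[0:2], 1)      // hardware type: Ethernet
	binary.BigEndian.PutUint16(b[2:4], 0x0800) // protocol type: IPv4
	b[4] = 6                                   // hardware address length
	b[5] = 4                                   // protocol address length
	binary.BigEndian.PutUint16(b[6:8], 2)      // operation: reply
	copy(b[8:14], sha)                         // sender hardware address (the node)
	copy(b[14:18], spa4[:])                    // sender protocol address (the VIP)
	copy(b[18:24], tha)                        // target hardware address
	copy(b[24:28], tpa4[:])                    // target protocol address
	return b, nil
}

func main() {
	// All four addresses below are example values.
	pkt, err := arpReplyFor("b8:27:eb:00:00:01", "192.168.1.252",
		"02:00:00:00:00:02", "192.168.1.10")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("% x\n", pkt)
}
```

Once a client has learned that mapping, traffic for the VIP arrives at that node and kube-proxy takes it from there.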

As for the configuration I’m using the default MetalLB manifests, with this config(Map):

apiVersion: v1

kind: ConfigMap

metadata:

namespace: metallb-system

name: config

data:

config: |

address-pools:

- name: my-ip-space

protocol: arp

cidr:

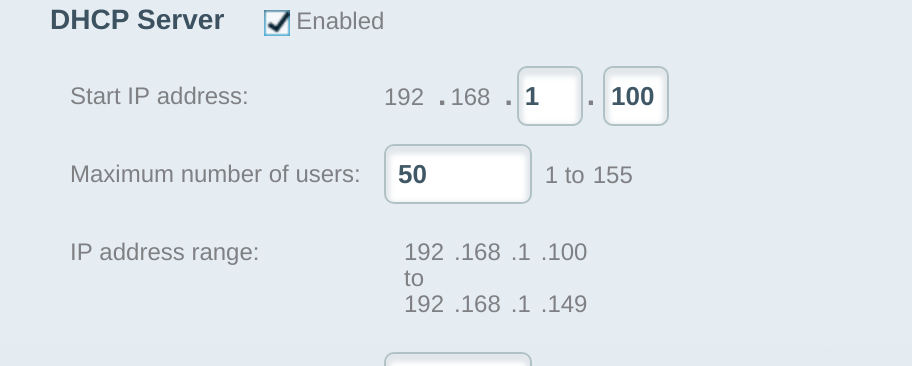

- 192.168.1.240/28

In that config I define that a /28 is to be used for VIPs and I took (some) care that my DHCP range sits below that.
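
As a quick sanity check on that pool, the following sketch computes which addresses a /28 covers and whether a given address falls inside it. The helper names `poolRange` and `inPool` are mine, and 192.168.1.100 is just an assumed stand-in for an address from the DHCP range.

```go
package main

import (
	"encoding/binary"
	"fmt"
	"net/netip"
)

// poolRange returns the first and last address covered by an IPv4 prefix.
func poolRange(cidr string) (netip.Addr, netip.Addr, error) {
	p, err := netip.ParsePrefix(cidr)
	if err != nil {
		return netip.Addr{}, netip.Addr{}, err
	}
	p = p.Masked() // normalise to the network address
	if !p.Addr().Is4() {
		return netip.Addr{}, netip.Addr{}, fmt.Errorf("%s is not an IPv4 prefix", cidr)
	}
	a4 := p.Addr().As4()
	start := binary.BigEndian.Uint32(a4[:])
	size := uint32(1) << (32 - p.Bits()) // number of addresses in the prefix
	var last [4]byte
	binary.BigEndian.PutUint32(last[:], start+size-1)
	return p.Addr(), netip.AddrFrom4(last), nil
}

// inPool reports whether ip lies inside cidr; invalid input yields false.
func inPool(cidr, ip string) bool {
	p, err := netip.ParsePrefix(cidr)
	if err != nil {
		return false
	}
	a, err := netip.ParseAddr(ip)
	if err != nil {
		return false
	}
	return p.Contains(a)
}

func main() {
	const pool = "192.168.1.240/28"
	first, last, err := poolRange(pool)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%s covers %s - %s\n", pool, first, last)
	fmt.Println("VIP 192.168.1.252 in pool:", inPool(pool, "192.168.1.252"))
	// 192.168.1.100 is an assumed example of a DHCP-assigned address.
	fmt.Println("192.168.1.100 in pool:", inPool(pool, "192.168.1.100"))
}
```

The /28 gives 16 addresses, 192.168.1.240 through 192.168.1.255, so anything the DHCP server hands out has to stay below .240.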

DNS over TLS

So I want to use this cluster to run CoreDNS and use encrypted DNS with an upstream recursor. As it happened, just such a service had launched, called Quad9. It uses an anycast VIP (9.9.9.9) and supports DNS-over-TLS.

I “only” needed a forwarder that can speak this protocol as a plugin for CoreDNS. [1]

With the following Corefile, CoreDNS can be configured to use this plugin:

    . {
        forward . tls://9.9.9.9 {
            tls_servername dns.quad9.net
            health_check 5s
        }
        cache 30
        prometheus
        log
        errors
    }

But this creates a problem: I need TLS certificates to be installed in the Docker container… How do I get ca-certificates installed in a Docker container (for arm) when I create those on my laptop (amd64)?
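
To make both the protocol and the certificate requirement concrete, here is a minimal sketch of a single DNS-over-TLS query using only the Go standard library. It is not the forward plugin's code, and the helper names (`buildQuery`, `frame`, `exchangeTLS`) are mine. DNS-over-TLS (RFC 7858) is plain DNS with the two-byte length prefix from DNS over TCP, sent over a TLS connection to port 853. Go's `crypto/tls` verifies the server certificate against the system root CAs by default, and those are exactly what the ca-certificates package provides.

```go
package main

import (
	"crypto/tls"
	"encoding/binary"
	"fmt"
	"io"
	"net"
	"os"
	"strings"
	"time"
)

// buildQuery encodes a minimal DNS query: one question, class IN,
// recursion desired. Hypothetical helper for illustration.
func buildQuery(id uint16, name string, qtype uint16) ([]byte, error) {
	msg := make([]byte, 12, 64)                  // 12-byte header, zeroed
	binary.BigEndian.PutUint16(msg[0:2], id)     // query ID
	binary.BigEndian.PutUint16(msg[2:4], 0x0100) // flags: RD
	binary.BigEndian.PutUint16(msg[4:6], 1)      // QDCOUNT
	for _, label := range strings.Split(strings.TrimSuffix(name, "."), ".") {
		if len(label) == 0 || len(label) > 63 {
			return nil, fmt.Errorf("invalid label %q in %q", label, name)
		}
		msg = append(msg, byte(len(label)))
		msg = append(msg, label...)
	}
	msg = append(msg, 0)                            // root label
	msg = append(msg, byte(qtype>>8), byte(qtype)) // QTYPE
	msg = append(msg, 0, 1)                         // QCLASS IN
	return msg, nil
}

// frame adds the two-byte length prefix used by DNS over TCP and TLS.
func frame(msg []byte) []byte {
	out := make([]byte, 2+len(msg))
	binary.BigEndian.PutUint16(out, uint16(len(msg)))
	copy(out[2:], msg)
	return out
}

// exchangeTLS sends one query over TLS and returns the raw response.
func exchangeTLS(server, serverName string, query []byte) ([]byte, error) {
	dialer := &net.Dialer{Timeout: 5 * time.Second}
	// ServerName is checked against the certificate; the roots come from the OS.
	conn, err := tls.DialWithDialer(dialer, "tcp", server, &tls.Config{ServerName: serverName})
	if err != nil {
		return nil, err
	}
	defer conn.Close()
	if err := conn.SetDeadline(time.Now().Add(5 * time.Second)); err != nil {
		return nil, err
	}
	if _, err := conn.Write(frame(query)); err != nil {
		return nil, err
	}
	var lenBuf [2]byte
	if _, err := io.ReadFull(conn, lenBuf[:]); err != nil {
		return nil, err
	}
	resp := make([]byte, binary.BigEndian.Uint16(lenBuf[:]))
	if _, err := io.ReadFull(conn, resp); err != nil {
		return nil, err
	}
	return resp, nil
}

func main() {
	// A fixed ID keeps the sketch short; real clients use a random one.
	query, err := buildQuery(1, "miek.nl.", 15) // 15 = MX
	if err != nil {
		fmt.Fprintln(os.Stderr, "build:", err)
		return
	}
	resp, err := exchangeTLS("9.9.9.9:853", "dns.quad9.net", query)
	if err != nil {
		fmt.Fprintln(os.Stderr, "exchange:", err)
		return
	}
	if len(resp) < 12 {
		fmt.Fprintln(os.Stderr, "short response")
		return
	}
	fmt.Printf("rcode=%d answers=%d\n", resp[3]&0x0f, binary.BigEndian.Uint16(resp[6:8]))
}
```

Run this in a container without any root certificates and the TLS handshake should fail with a certificate verification error, which is the problem described above.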

Arm Docker Containers

So how do you create a Docker container for arm with ca-certificates on amd64? Just run apt-get install while building the container, right? No.

Doing that generates an error when building for arm: exec user process caused "exec format error"

Which makes some sense (though I do wish Docker could hide this from you). To proceed you need to jump through quite a few hoops, most of which are documented in this article on https://resin.io.

TL;DR: you’ll need to configure binfmt on your host machine and have some very wacky /bin/sh switching in the image while building it.

dxbuild was born to streamline some of this. It builds a builder image that you can inherit from. For amd64 no special hand-holding is needed and you can just use debian:stable-slim, but for arm I built arm32v7/debian-builder:stable-slim. [2]

With these builder images cross-platform building works. Together with the manifest-tool any docker client will download the correct image for the architecture it is running on. My Dockerfiles look a bit convoluted now, but that’s a small price to pay.

    FROM arm32v7/debian-builder:stable-slim
    RUN [ "cross-build-start" ]
    RUN apt-get update && apt-get install -y ca-certificates
    # ...
    RUN [ "cross-build-end" ]
    RUN [ "cross-build-clean" ]

But I can now finally build my images: stunnel, coredns, prometheus and node-exporter.

Setting live

MetalLB is working, CoreDNS images are ready, let’s deploy! For MetalLB I just followed the instructions on the website. Next, deploy CoreDNS:

    % kubectl apply -f https://gist.github.com/miekg/85a27e7bd60d5c156c705e8f7eed3a97
    % kubectl get pods
    coredns-deployment-d46d88f8b-9hj29   1/1       Running   0          9s
    coredns-deployment-d46d88f8b-d6k69   1/1       Running   0          9s
    coredns-deployment-d46d88f8b-mx6dh   1/1       Running   0          9s
    coredns-deployment-d46d88f8b-tlr9x   1/1       Running   0          9s

I’ve configured 192.168.1.252 as my CoreDNS VIP, and voila:

    % dig @192.168.1.252 mx miek.nl +noall +answer
    miek.nl.    30    IN    MX    10 aspmx3.googlemail.com.
    miek.nl.    30    IN    MX    10 aspmx2.googlemail.com.
    miek.nl.    30    IN    MX    5 alt1.aspmx.l.google.com.
    miek.nl.    30    IN    MX    5 alt2.aspmx.l.google.com.
    miek.nl.    30    IN    MX    1 aspmx.l.google.com.

W00T! It works! But only for UDP…

Continuous Integration

While things are now working, I still need a way to deploy easily and to version the Kubernetes manifests. All my Dockerfiles are now templates:

    FROM {{ .Image }}
    {{ .CrossBuildStart }}
    RUN apt-get -u update && apt-get -uy upgrade
    RUN apt-get install -y ca-certificates procps bash
    COPY coredns /coredns
    {{ .CrossBuildEnd }}
    {{ .CrossBuildClean }}
    ENTRYPOINT [ "/coredns" ]

A small Go program creates the correct image for each architecture (arm, arm64, etc.), so all the cross-building works. It either blanks out the CrossBuild stuff for amd64 or enables it for the other architectures.
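
The rendering step could look roughly like the sketch below, which feeds the template above through Go's `text/template`. This is a guess at the shape, not the actual program: the `buildVars` struct and the `varsFor` and `render` functions are hypothetical, and only amd64 and arm are filled in because those are the only base images named in this post.

```go
package main

import (
	"fmt"
	"os"
	"strings"
	"text/template"
)

// dockerfileTmpl is the CoreDNS Dockerfile template from this post.
const dockerfileTmpl = `FROM {{ .Image }}
{{ .CrossBuildStart }}
RUN apt-get -u update && apt-get -uy upgrade
RUN apt-get install -y ca-certificates procps bash
COPY coredns /coredns
{{ .CrossBuildEnd }}
{{ .CrossBuildClean }}
ENTRYPOINT [ "/coredns" ]
`

// buildVars holds the per-architecture template values (hypothetical type).
type buildVars struct {
	Image           string
	CrossBuildStart string
	CrossBuildEnd   string
	CrossBuildClean string
}

// varsFor picks the base image and cross-build lines for an architecture.
func varsFor(arch string) (buildVars, error) {
	switch arch {
	case "amd64":
		// Native build: the cross-build fields stay empty.
		return buildVars{Image: "debian:stable-slim"}, nil
	case "arm":
		return buildVars{
			Image:           "arm32v7/debian-builder:stable-slim",
			CrossBuildStart: `RUN [ "cross-build-start" ]`,
			CrossBuildEnd:   `RUN [ "cross-build-end" ]`,
			CrossBuildClean: `RUN [ "cross-build-clean" ]`,
		}, nil
	}
	return buildVars{}, fmt.Errorf("unsupported architecture %q", arch)
}

// render returns the Dockerfile text for one architecture.
func render(arch string) (string, error) {
	vars, err := varsFor(arch)
	if err != nil {
		return "", err
	}
	t, err := template.New("Dockerfile").Parse(dockerfileTmpl)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := t.Execute(&sb, vars); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	for _, arch := range []string{"amd64", "arm"} {
		out, err := render(arch)
		if err != nil {
			fmt.Fprintln(os.Stderr, "render:", err)
			return
		}
		fmt.Printf("# --- %s ---\n%s\n", arch, out)
	}
}
```

For amd64 the empty fields simply leave blank lines behind, which a Dockerfile tolerates.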

With a Makefile.inc and this tiny Makefile, I can easily build custom CoreDNS Docker images.

    VERSION:=0.11
    COREDNS:=~/g/src/github.com/coredns/coredns

    include ../../Makefile.inc

    asset:
    	cd $(COREDNS); make SYSTEM="GOOS=linux GOARCH=$(arch)"
    	cp $(COREDNS)/coredns $(arch)/coredns

make ARCH=arm build push will build miek/coredns and push to my docker hub as an arm build.

And then with set image, update the deployment:

    % kubectl set image deployment/coredns-deployment coredns=miek/coredns:0.11

The build for prometheus needs a similar tweak, as they have binaries for arm, but no multi-arch Docker containers…

    VERSION:=0.4
    PROMVERSION=2.0.0
    PROMETHEUS:=https://github.com/prometheus/prometheus/releases/download/v$(PROMVERSION)

    include ../../Makefile.inc

    asset:
    	case $(arch) in \
    	arm) curl -L $(PROMETHEUS)/prometheus-$(PROMVERSION).linux-armv7.tar.gz -o $(arch)/prom.tar.gz;; \
    	*) curl -L $(PROMETHEUS)/prometheus-$(PROMVERSION).linux-$(arch).tar.gz -o $(arch)/prom.tar.gz;; \
    	esac; \
    	( cd $(arch); tar --extract --verbose --strip-components 1 --file prom.tar.gz )

Final Setup

I’ve deployed prometheus with a stunnel sidecar so my (remote) Grafana can use it as a data source. Node-exporter is deployed for node metrics, resulting in this bunch of pods (I still want to put the monitoring in its own namespace and set up RBAC for it).

    % kubectl get pod
    NAME                                     READY     STATUS    RESTARTS   AGE
    coredns-deployment-d46d88f8b-9hj29       1/1       Running   0          3d
    coredns-deployment-d46d88f8b-d6k69       1/1       Running   0          3d
    coredns-deployment-d46d88f8b-mx6dh       1/1       Running   0          3d
    coredns-deployment-d46d88f8b-tlr9x       1/1       Running   0          3d
    monitoring-deployment-55fd495dd5-kq8tw   2/2       Running   0          12d
    node-exporter-28bxf                      1/1       Running   0          6d
    node-exporter-8rcvz                      1/1       Running   0          6d
    node-exporter-vpb8k                      1/1       Running   0          6d
    node-exporter-z9chd                      1/1       Running   0          6d
    zsh-deployment-5798bb5648-8vdtw          1/1       Running   0          16d

The zsh-deployment is there to have an in-cluster pod that can be used for debugging, sort of a toolbox on a cluster level.

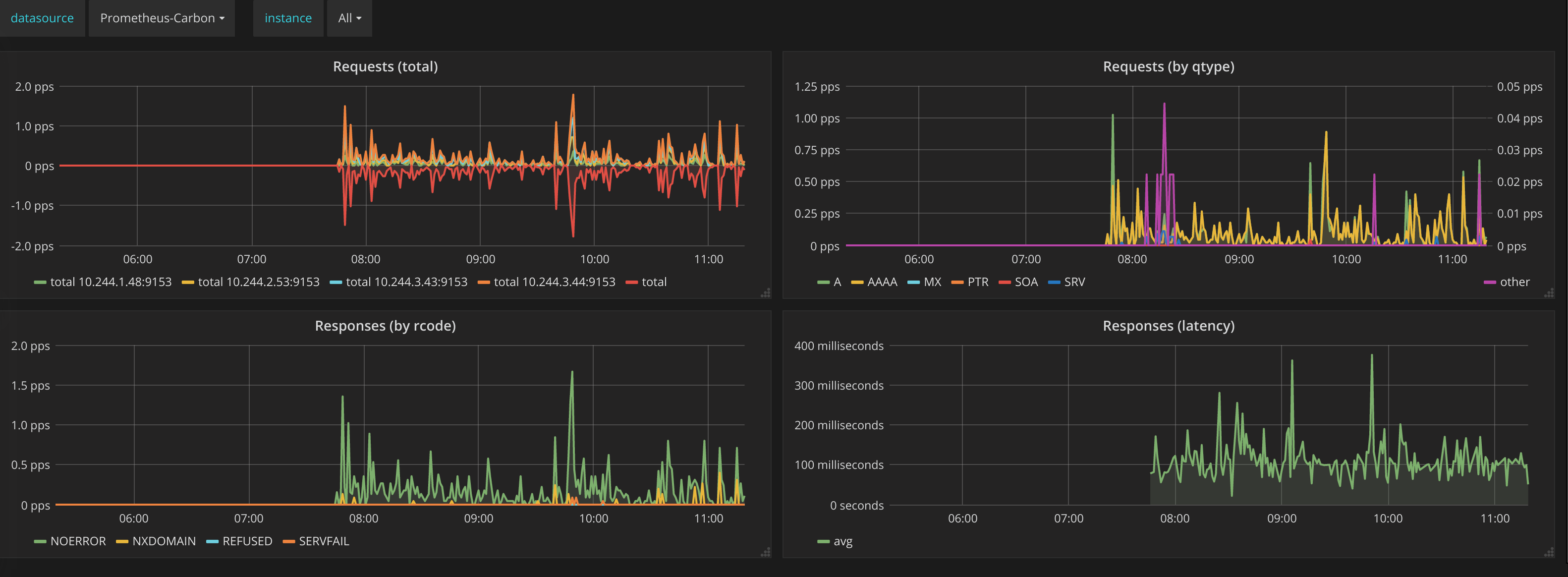

In the end I can see via my monitoring how my CoreDNS is holding up:

1. The code can be found here.

2. I ditched Alpine Linux and just use Debian containers. I know Debian and I also use it on my laptop. Uniformity++.